Site issues on July 2nd, 2012

What a day! July 2nd seemed to go from sunshine to rain in a couple of hours. The good news is: we’re back. Let’s first go through what happened…

What happened?

This morning, you've probably received an e-mail stating that "Your Free subscription at MyGet has expired". Free? Expired? Even we were puzzled! We’ve quickly sent out an e-mail stating free subscriptions don’t expire and that we were investigating the issue. In our rush to find out what happened, we even forgot to remove some template text from that e-mail. Professional of us, really.

This morning, you've probably received an e-mail stating that "Your Free subscription at MyGet has expired". Free? Expired? Even we were puzzled! We’ve quickly sent out an e-mail stating free subscriptions don’t expire and that we were investigating the issue. In our rush to find out what happened, we even forgot to remove some template text from that e-mail. Professional of us, really.

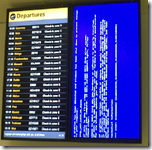

A couple of hours later, the website started acting funny: one moment it was up, one moment it appeared down. The strange thing was: we did not see this happening in our server monitoring page. The brand new Windows Azure portal was all shiny and bright, all systems running. A short glitch? Probably.

And then 4:00 PM (GMT+1) came around: we started to receive SMS messages from Pingdom. UP, DOWN, UP, DOWN. Some sweaty hours later, we’ve found the root cause of all this mess and shortly thereafter, deployed a hotfix to the cloud. What a day!

All-in-all, we’ve spammed you in the morning, had some small glitches during the day and went bezerk between 4:00 PM (GMT+1) and 7:00 PM (GMT+1).

What was wrong?

During the entire day, we were suspecting two of our latest changes. First of all, we’ve moved storage accounts in Windows Azure to make use of some of the new features in there with regards to mirroring packages. The code isn’t done yet, but we did already switch the storage accounts. Next to that, we’ve recently deployed our site using ASP.NET MVC 4 (RC). Was any of this the cause? We’ve been searching… But no.

Elmah revealed some interesting details. We were getting throttled on those new storage accounts. Was this due to the fact that we enabled storage analytics? We’ve disabled them and found that we were back up in full glory! For a moment, because this wasn’t the reason we were being throttled…

We process several operations asynchronously, such as updating our caches. It seemed that there were 16.000 commands in there, all telling the system to update the cache for one specific feed. One of our newest MyGet members managed to break a couple of gears there! Something we don’t judge. In contrary: there’s no better stress test or user test than real users.

A rookie mistake

Now why were there 16.000 messages unprocessed and why were some of them on the dead-letter queue? And why were our machines looking fine (all green!) while the website did not respond? The answer lies in the following piece of code:

1 [DebuggerDisplay("{Title} Version={Version}")] 2 public class FeedPackage 3 : TableServiceEntity 4 { 5 public string Id { get; set; } 6 public string Version { get; set; } 7 8 // ... 9 10 public override bool Equals(object obj) 11 { 12 if (ReferenceEquals(null, obj)) return false; 13 if (ReferenceEquals(this, obj)) return true; 14 if (obj.GetType() != typeof(FeedPackage)) return false; 15 return GetHashCode() == obj.GetHashCode(); 16 } 17 18 public override int GetHashCode() 19 { 20 int hashCode = 7; 21 22 if (PartitionKey != null) hashCode ^= PartitionKey.GetHashCode(); 23 if (RowKey != null) hashCode ^= RowKey.GetHashCode(); 24 25 return hashCode; 26 } 27 }

Did you spot it? We’ve gone with a complete rookie implementation of Equals and GetHashCode there…

The devil is in the fact that we’re using the hashcode of package A and compare it with package B. That’s a serious problem, as those hash codes are based on the hashcodes of their package identifier and package version. Guess what MSDN tells us…

If two string objects are equal, the GetHashCode method returns identical values. However, there is not a unique hash code value for each unique string value. Different strings can return the same hash code.

Our newest member was in the position where he had a lot of packages uploaded to MyGet and by coincidence, two packages appeared to be “equal” using our faulty code above.

Now why did the website choke on that?

Windows Azure table storage, which we use to store all your data, In the .NET space, the WIndows Azure storage client uses WCF Data Service to fetch data. Deep in that framework, the Equals'() method is called on every entity to check whether a specific entity is already being tracked by the data context in use. And since we had a duplicate result for Equals(), an unhandled exception was thrown there.

Wait a minute: you guys don’t catch unhandled exceptions? Yes we do. And when such exception occurs, we retry some operations for up to five times. Retry operations? Well, rather than failing immediately, a good pattern is to retry a failing operation to check that the error wasn’t just a minor glitch like a network fault. On Windows Azure, it is recommended to do this for calls to any external system, like a webserver calling storage. Check David Aiken’s blog for some info on this.

So, retries… Yes. Retry an I/O operation with minor latency for five times. Have a user who’s experiencing an issue with the website F5 a couple of times. And have that queue with 16.000 operations pending hammer this storage account again. What will happen? Throttling. Windows Azure tells our system to retry again after a second. And our retry logic does exactly that. This retry logic causes some threads to sleep, all the queue processing and F5-ing causes some more thread to sleep and what happens? The server comes to a halt while it still looks okay to our external monitoring.

Are we running just one server? No. We’re running multiple, in a round-robin load-balanced farm. This means that all this refreshing impacted all of our servers, making the site as slow as a snail. And recover again. And go almost down again…. and come up again. Exactly what we’ve been seeing today.

What are we doing to prevent this from happening in the future?

The truths of offering a cloud service are: hardware fails, software has bugs and people make mistakes. Hardware failures are mitigated by the Windows Azure system. Software bugs? It seems we’re proficient at that. And yes, that was a giant mistake of us. Our job is to mitigate all of these and provide a reliable, robust service to you. We’ll be using the lessons learned today to improve our service, your service.

First of all, we’ve fixed our rookie mistake. This should have never happened and we’ll make sure that our code base is checked and repaired for faulty Equals / GetHashCode implementations.

Next, we’ll be reviewing our retry logic. Blindly retrying on any exception type isn’t the smartest thing to do, so it seems. We’ll be making sure the retry logic only fires on transient exceptions and not blindly on any exception that occurs.

We’ll also be investigating additional monitoring. We’re not sure yet about possible tools or services there, we do want to know when something is wrong and our CPU is heating the entire datacenter at 100%.

We're sorry!

Happy packaging!

The MyGet team